Do you think all words have equal level of importance and weightage? Of course not. For example, the word "you" carries way less information than that of "nepotism". And important words will serve as index terms. Then you may ask why not index everything (full-text). The reasons are:

(1)Increase the level of noise(stopwords, eg - a, an, the, etc)

(2)Reduce the overall importance of a document

(3)Reduce indexing and retrieval performance

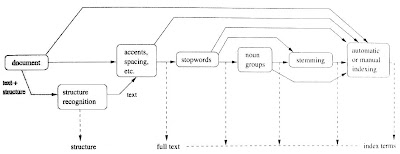

It is now clear that some kind of preprocessing task is needed on the documents to alleviate the above issues. Below represents a list of steps required to preprocess on the documents.

(1) Lexical Analysis

It means converting stream of characters into stream of words. In another term, it is identifying words. Four types of scenarios are needed to consider in doing lexical analysis: whether to consider numbers, hyphen, punctuation, case-sensitivity in indexing. For example, if you index all numbers up to 10 digits, then it may add 1 billion terms to your lexicon. So these four scenarios are really important in determing the size of your lexicon. By the way, Google knows every number. Have you ever tried typing your phone no in Google?

(2) Stopword Removal

Examples of stopwords are a, an, the, about, of, to, be, not etc. Considering stopwords removal, it will reduce your lexicon size by 40% or more. But doing so may not be able to find specialized words like "to be or not not be".

(3) Stemming

It is a kind of morphology. By doing so, it will reduce the no of words and improve performance. For example, whenever the indexer finds "hope, hoped, hop", it will reduce all to "hop" and store it in the lexicon - meaning there will be only one word "hop" in the lexicon. The most frequently used stemmer is "Porter Stemmer". It uses a 5-phase pattern matching rules to determine which affixes to strip in which order and when to apply repair strategies.

(4) Selection of Index Terms

A sentence contains nouns, pronouns, articles, verbs, adjectives, adverbs, connectives. We need to decide which one to index among them. Noun carries most semantics but sometimes you may want to cluster co-occuring nouns into phrases(n-grams, typically n=3, eg. Information Retrieval) and concepts (noun groups of distance n, typically n=3-5, eg. gates microsoft richest)

(5) Grouping Similar Terms Together

We can use Thesaurus and WordNet to group similar terms together. Eg. stupid, moronic, idiotic, retarded, spastic. Thesaurus is precompiled list of important words w for a given domain. WordNet is English Semantics Lexicon. It is a BSD lincensed open source software. WordNet groups nouns, verbs, adverbs, adjectives into synsets and create semantic relationship between synsets. Eg.

(3) Antonyms (NOT): stupid is not smart

(2) Hyponyms (ISA): chihuahua is a kind of dog

(1) Meronyms (HASA): dog has a mouth

The following diagram represents logical view of a document through out the various phases of text processing.

Below is a present song from me for you.

Stayed Tuned,

- Zaw Win Htike

2 comments:

So informative! Thanks!

Thank you

very helpfull

Post a Comment